Originally Published: November 2, 2012

How to design a high-performance website

Nearly three years ago, I wrote a short post about website performance, arguing that lightweight design would win out over heavyweight design.

High-fidelity interfaces, by their very nature, are bulky. Bulky design has no place in a future that will soon see mobile web traffic outpace desktop traffic. Though improving, mobile web speeds aren’t broadband, and mobile device displays are nowhere near the pixel perfection offered by cinema monitors.

So how’s that heavyweight homepage working out for you? The audience running broadband on their oft-updated, recently purchased rigs probably loves your site. But for everyone else, maybe it loads slowly. Maybe it doesn’t load at all.

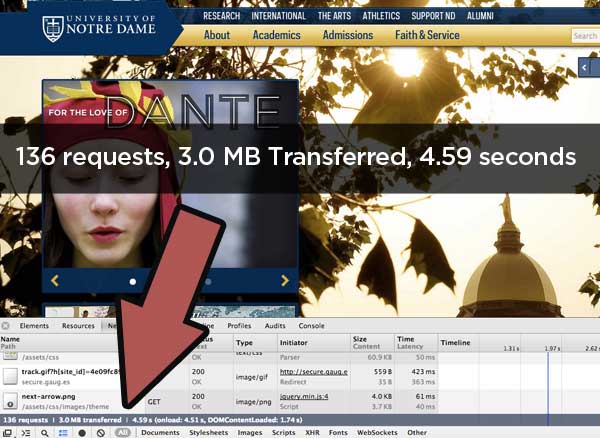

Despite my warning, the web has continued full-throttle toward heavyweight design.

Performance Matters

Website performance is essential to creating a positive user experience; nothing annihilates a visitor’s experience quite like slow-loading pages. Folks who don’t really care about your site will bounce faster than you can, well, say ‘bounce’. But if they have to use your website to accomplish some task, all the time spent waiting for pages to load will turn into a serious grudge. Eventually, users will despise you and your website.

Hefty websites continue to be churned out by professional design shops, which fail at performance because they use a PSD-driven workflow. At most firms, website design literally means pushing pixels and getting client sign-off before anything is built. When the look and feel has been approved down to the pixel, the developer has to reproduce it exactly, regardless of the performance cost.

Responsive/adaptive design makes this conversation even more important. When we design one site for many devices, there’s a risk that mobile users will be fed a hefty meal that takes minutes to download. If there were a 45-minute wait in line for fast food at McDonald’s, would you stick around or just go to the Burger King next door?

Designing for Performance

Designing for performance means changing your workflow. Get rid of layered PSDs and build a functional HTML/CSS prototype. Start with the overall look and feel and the critical points of interaction, then dive into detailed design work. Add JavaScript libraries only when you need them. As much as possible, merge your visual design and frontend development.

Throwing away the PSD is the most significant change we need to make. As website designers embrace the emerging responsive design ethos, it makes less and less sense to whip up PSDs for every possible device and resolution.

Over the last few years, my design workflow has evolved to this: Discussion, sketching, and prototyping. Clients tell me what they want to get out of a site, I generate ideas through sketching, and then settle on a consensus by building out a prototype. This process allows me to rapidly construct exceptional, UX-oriented design. Outstanding performance comes naturally with this workflow, because a functional prototype will not pass muster if it does not perform.

Improving Website Performance (A Technical View)

Website performance is a combination of two factors: network latency and server latency. Network latency is the time a request and its response spend traveling between the browser and your server. Server latency is the time your server spends handling that request once it arrives.

Strategies for Improving Network Latency

Reduce Number of Requests

Websites with fewer asset requests take less time to load.

Imagine that you’ve just returned home and your car’s trunk is full of groceries ready to be taken inside. Your groceries are in bags, and even though you’re strong, you don’t have the capacity to carry all the bags in at once, so you make multiple trips.

Your web browser behaves similarly. It opens several connections to the server at once so that multiple assets can be requested simultaneously, reducing the number of trips. But the number of parallel connections is limited, so a page with dozens of separate assets still requires many more round trips than a page with only a few.
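To put rough numbers on the grocery analogy, here is a back-of-the-envelope model in Python. It assumes every asset costs exactly one round trip and that the browser fetches assets in waves limited by its parallel connections per host (often around six under HTTP/1.1). Real browsers discover assets as they parse the page, so treat this as an illustration rather than a prediction; the function name and the sample figures are hypothetical.

```python
import math


def estimate_request_latency(num_assets: int, parallel_connections: int, rtt_ms: float) -> float:
    """Rough time, in milliseconds, spent on round trips to fetch a page's assets.

    Simplifying assumptions (not a real browser model): each asset costs exactly
    one round trip, all assets are known up front, and the browser requests them
    in waves of `parallel_connections` at a time.
    """
    if num_assets <= 0:
        return 0.0
    waves = math.ceil(num_assets / parallel_connections)  # trips back to the car
    return float(waves * rtt_ms)


# A hypothetical page with 60 assets vs. the same page bundled into 12 assets,
# assuming 6 parallel connections and a 200 ms mobile round-trip time.
print(estimate_request_latency(60, 6, 200))  # 2000.0
print(estimate_request_latency(12, 6, 200))  # 400.0
```

Under these assumptions, bundling 60 assets into 12 (for example, by concatenating stylesheets and scripts) cuts the time spent on round trips by a factor of five.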

Use a Highly-Available DNS Service

When you load a website for the first time, your browser has to find that website’s IP address. Behind the scenes, a DNS resolver works through these steps:

- Talk to root name servers to determine the name servers for your TLD.

- Talk to your TLD’s name servers to get the authoritative name servers for your website.

- Talk to the authoritative name servers to get the IP address.

A highly-available DNS service reduces initial IP lookup time by pushing your DNS records to a series of DNS servers around the world. Instead of having to talk to a single authoritative DNS server in the United States to get your server’s IP address, a user in Europe will get this information faster because of the presence of a local authoritative DNS server.
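You can get a feel for lookup cost from your own machine with a few lines of Python. This sketch simply times `socket.getaddrinfo`, which asks the operating system’s resolver for an address; the `time_dns_lookup` helper is my own, not part of any library. Because the OS, your router, and your ISP all cache DNS answers, only a cold lookup reflects the full root, TLD, and authoritative walk, so repeated runs will usually be much faster than the first.

```python
import socket
import time


def time_dns_lookup(hostname: str, port: int = 443) -> float:
    """Return how long one address lookup for `hostname` takes, in milliseconds.

    Raises socket.gaierror if the name cannot be resolved.
    """
    start = time.perf_counter()
    socket.getaddrinfo(hostname, port)  # delegates to the OS resolver (and its cache)
    return (time.perf_counter() - start) * 1000.0
```

For example, `time_dns_lookup("example.com")` returns the elapsed milliseconds; substitute your own domain and compare results from different locations.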

Content Delivery Network (CDN)

CDNs like AWS CloudFront take your static assets and push them to edge servers positioned around the world. Because static assets account for the bulk of initial HTTP requests, serving them from a nearby edge server considerably reduces the distance between those assets and a visitor’s computer. All serious websites and web applications use a CDN.
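On the application side, using a CDN mostly means pointing asset URLs at the CDN’s hostname instead of your origin server. The sketch below assumes a hypothetical CDN hostname (`static.example.com`), a hypothetical list of static file extensions, and a hypothetical `asset_url` helper; many frameworks provide their own setting for a static-asset host that does the same job.

```python
from urllib.parse import urljoin

# Hypothetical CDN hostname. A CloudFront distribution gets its own
# *.cloudfront.net domain, which you can alias to a subdomain like this one.
CDN_BASE = "https://static.example.com/"

# File types treated as static assets (an assumption; adjust for your site).
STATIC_EXTENSIONS = (".css", ".js", ".png", ".jpg", ".gif", ".svg", ".woff")


def asset_url(path: str, cdn_base: str = CDN_BASE) -> str:
    """Serve static files from the CDN; leave dynamic URLs on the origin server."""
    if path.lower().endswith(STATIC_EXTENSIONS):
        return urljoin(cdn_base, path.lstrip("/"))
    return path


print(asset_url("/css/site.css"))   # https://static.example.com/css/site.css
print(asset_url("/account/login"))  # /account/login
```

Dynamic pages stay on your own server, while the heavy, cacheable files travel the short distance from the nearest edge.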

Strategies for Improving Server Latency

Once a request arrives, your server needs to send a response. Sometimes it’s easy and just means delivering a static asset like a CSS file or a flat HTML file. Other times, it means hitting an application that needs to perform expensive logic before returning a response.

Because the sources of server latency vary wildly, I will not discuss Apache vs. nginx, database optimization, in-memory caching, or anything of that nature.

Output Compression

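Output compression (typically gzip) shrinks a response before it is sent, and the browser decompresses it on arrival. Text-based responses such as HTML, CSS, and JavaScript usually compress well, so less data crosses the network and pages generally load faster. Think of this as crumpling a piece of paper into a ball before throwing it.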
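Here is that round trip in miniature, using Python’s standard `gzip` module on a made-up, repetitive chunk of HTML. In practice you would enable compression in your web server (for example, Apache’s `mod_deflate` or nginx’s `gzip on;` directive) rather than in application code; the browser advertises support with an `Accept-Encoding: gzip` request header, and the server labels the compressed body with `Content-Encoding: gzip`.

```python
import gzip

# Made-up, repetitive markup standing in for a real page.
html = ('<li class="product"><a href="/item">Item</a></li>\n' * 200).encode("utf-8")

compressed = gzip.compress(html)        # what the server sends (Content-Encoding: gzip)
restored = gzip.decompress(compressed)  # what the browser does when the response arrives

assert restored == html
print(f"{len(html)} bytes before, {len(compressed)} bytes after")
```

Markup is highly repetitive, which is why text assets compress so well; already-compressed formats like JPEG and PNG gain little.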

Local Caching

Most CMSes and frameworks offer page caching. Enabling it means the application writes each rendered response to disk and serves that copy until the cache expires or is manually cleared. Even though a request still hits your server, the response is faster because the database won’t be involved.
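The idea behind local page caching fits in a short sketch. This is not how any particular CMS implements it; the cache directory, the five-minute TTL, and the `cached_page`/`invalidate` helpers are all hypothetical. When a fresh file exists on disk it is returned directly, so the render function (and whatever database queries it would run) is skipped.

```python
import hashlib
import os
import tempfile
import time
from typing import Callable

# Hypothetical cache location and expiry; real CMSes expose their own settings.
CACHE_DIR = os.path.join(tempfile.gettempdir(), "page_cache")
CACHE_TTL_SECONDS = 300


def _cache_path(url: str) -> str:
    """Map a URL to a file path (hashed so any URL becomes a safe file name)."""
    key = hashlib.sha256(url.encode("utf-8")).hexdigest()
    return os.path.join(CACHE_DIR, key + ".html")


def cached_page(url: str, render: Callable[[str], str], ttl: int = CACHE_TTL_SECONDS) -> str:
    """Return the page from disk if a fresh copy exists; otherwise render and store it."""
    os.makedirs(CACHE_DIR, exist_ok=True)
    path = _cache_path(url)

    # Cache hit: skip the application logic and the database entirely.
    if os.path.exists(path) and time.time() - os.path.getmtime(path) < ttl:
        with open(path, encoding="utf-8") as f:
            return f.read()

    # Miss or expired: do the expensive work once and write the result to disk.
    html = render(url)
    with open(path, "w", encoding="utf-8") as f:
        f.write(html)
    return html


def invalidate(url: str) -> None:
    """Manually remove a cached page, e.g. after its content is edited."""
    try:
        os.remove(_cache_path(url))
    except FileNotFoundError:
        pass


# Usage: the second request is served from disk without calling render again.
render_calls = []

def slow_render(url: str) -> str:
    render_calls.append(url)  # stands in for templates plus database queries
    return f"<h1>{url}</h1>"

invalidate("/about")
cached_page("/about", slow_render)
cached_page("/about", slow_render)
print(len(render_calls))  # 1
```

A production cache would also need atomic writes and a way to clear every page at once when a site-wide template changes.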

HTTP Caching

HTTP caching with a reverse proxy (typically Varnish) is often used to front high-traffic websites and web applications. When your server responds to a request, it also sends an expiration header, such as Cache-Control: max-age=3600 or Expires. For example, I might say that a particular page expires in 3600 seconds (60 minutes). Varnish reads this header, caches the response, and then delivers the cached copy for subsequent identical requests until it expires.
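To make the mechanism concrete, here is a toy model of a caching reverse proxy in Python. It is not Varnish and ignores much of HTTP caching (`private`, `no-cache`, `Vary`, cookies, and so on); it only shows an origin that declares `Cache-Control: max-age=3600` and a cache in front of it that reuses the response until that time runs out. The `origin` function and `ToyHttpCache` class are hypothetical, and the injectable clock exists only so expiry can be demonstrated without waiting an hour.

```python
import re
import time
from typing import Callable, Dict, Tuple

Response = Tuple[Dict[str, str], str]  # (headers, body)


def origin(path: str) -> Response:
    """Stand-in for the application: expensive to run, but declares how long its output stays valid."""
    body = f"<h1>{path}, rendered at {time.time():.0f}</h1>"
    return {"Cache-Control": "public, max-age=3600"}, body


class ToyHttpCache:
    """A tiny model of a caching reverse proxy (NOT Varnish itself).

    Keys on the request path, reads max-age from the origin's Cache-Control
    header, and serves the stored copy until that many seconds have passed.
    """

    def __init__(self, backend: Callable[[str], Response],
                 clock: Callable[[], float] = time.time) -> None:
        self.backend = backend
        self.clock = clock
        self.store: Dict[str, Tuple[float, Response]] = {}
        self.backend_hits = 0

    def get(self, path: str) -> Response:
        entry = self.store.get(path)
        if entry is not None and entry[0] > self.clock():
            return entry[1]  # hit: the application never sees this request

        self.backend_hits += 1
        response = self.backend(path)
        match = re.search(r"max-age=(\d+)", response[0].get("Cache-Control", ""))
        if match:  # only cache responses that say how long they stay fresh
            expires_at = self.clock() + int(match.group(1))
            self.store[path] = (expires_at, response)
        return response


# 1,000 identical requests reach the application only once.
cache = ToyHttpCache(origin)
for _ in range(1000):
    cache.get("/pricing")
print(cache.backend_hits)  # 1

# A simulated clock shows expiry: an hour and a second later, the origin is asked again.
now = [0.0]
timed = ToyHttpCache(origin, clock=lambda: now[0])
timed.get("/pricing")
now[0] = 3601.0
timed.get("/pricing")
print(timed.backend_hits)  # 2
```

The origin stays in charge of freshness through its headers, which is why this approach suits application-driven pages that don’t change often.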

Performance gains through HTTP caching can mean going from 50-100 requests per second served by the application to 25,000 requests per second served by Varnish, without breaking a sweat. Though a CDN is still better for pure static assets, Varnish is quite powerful for application-driven responses that don’t change often.

Conclusion

Website and web application performance is an important topic that can be addressed from many angles. All projects will benefit from the adoption of a prototype-oriented workflow that allows designers and developers to observe performance as a concept is being produced. From a technical perspective, developers can employ a number of strategies to improve network and server latency, thereby reducing load times and keeping visitors happy.